CompImg

Focus Stacking – Investigating Various Approaches

by Manuel Gonzales & Lukas Giehl

27.03.2024

This project is about implementing and comparing various algorithms of focus stacking currently in use. It was done as part of the Computational Imaging course by Professor Ihrke in the 2023/24 winter semester at the University of Siegen. We present results from the following algorithms:

- Laplacian

- Real wavelet

- Laplacian Pyramid

- Complex wavelet

What is focus stacking?

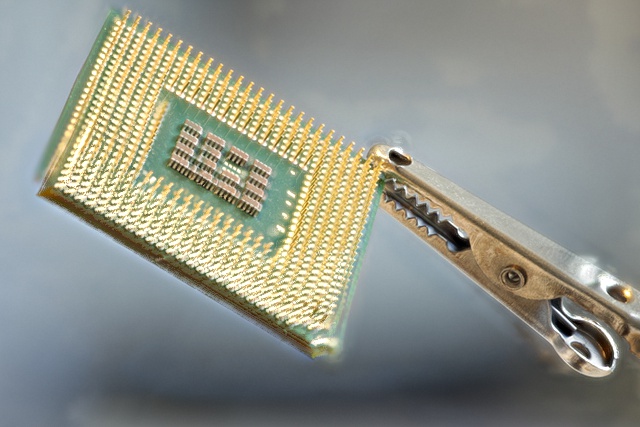

Focus stacking (also called focal stacking) takes as input multiple photographs of the same scene which have different camera focus parameters. As a result, the in-focus “sharpest” parts of each photo are different, even though the camera and scene remain stationary. This often cannot be avoided in certain applications, such as microscopy, where cameras are unable to keep the entire object (for example, a small insect) in focus. The goal of focus stacking is to output a single image that appears in-focus at every position. This is done by using algorithms that determine the most in-focus part of each input photo and combine these parts to produce the output image.

Alignment

Before images can be processed by any of the above algorithms, they must be aligned. This is because different focus distances bring an inherent “zoom” distortion to each input image. Alignment ensures that for every pixel coordinate, every input image is displaying the same position (and thus object) from the real world. Only then can the individual pixels of every input image be reliably compared. Bad alignment can result in “ghosting” in the combined output image. This is due to the same object being in different positions in each input image and thus appearing multiple times in the output image.

Ghosting in image result

We used the OpenCV Python library method cv2.findTransformECC() to perform the alignment (see the alignment Python files in our repository). The documentation for the original OpenCV version can be found here: https://docs.opencv.org/4.x/dc/d6b/group__video__track.html

We debugged the C++ code for the PetteriAimonen repository to find what is most important for achieving good alignment, since it already shows impressive alignment with the OpenCV library. We found that the MOTION_AFFINE mode in cv2.findTrasformECC() works best. Brightness and contrast post-processing are not significant when it comes to alignment. However, feeding the previous transformation matrix as the input array to every new findTransformECC() method call prevented different local minima from being reached, especially for the CPU data-set. Without this input, ghosting occurred as seen in the CPU image above.

One important point to keep in mind is that the input images should use as a reference orientation an image from the image set with the least amount of scene information. This ensures that other images will always contain features that can be aligned to this reference image. In our data-sets, this was done by choosing the image that was “zoomed in” the most due to focus distortion, made easier by the fact that our data-set was already sorted according to the focus distance.

Approaches

The crux of every approach is determining which image has the most in-focus pixel (i.e. color information) at each pixel coordinate, and writing the determined pixel at each coordinate to the output image. Thus the output image will be composed of only the pixels from the sharpest input images for each area. In general, in-focus pixel areas are characterized by carrying high-frequency information, i.e. from pixel to pixel there are sharp color changes. Compare to blurred parts of an image, where color information is spread out across neighboring pixels and is thus low-frequency in nature. How these “high frequency pixels” are found is what differentiates each algorithm…

Laplacian

The Laplacian algorithm uses the Laplacian kernel, which delivers the 2nd derivative at each pixel of an image. A higher absolute value corresponds to more extreme change and thus higher frequency. This is the fastest algorithm, taking only seconds. Other approaches take minutes to hours, depending on the image size.

More explanation…

[KERNEL IMAGE]

Real wavelet

The real wavelet algorithm makes use of wavelet functions with a range in real number space. Wavelet functions are interpreted as kernels which decompose an image into its high frequency and low frequency parts. For the simplest wavelet, the Haar wavelet, which we used, the low frequency decomposition image (called a “sub-band”) corresponds to a down-sampling of the image to halved dimensions. The high frequency decomposition contains 3 sub-band images corresponding to differences in the horizontal, vertical, and diagonal directions. All images in the input set are decomposed into these 4 low & high frequency sub-bands. The goal is to find the pixels in all four sub-bands that contain the strongest high frequency information. We do this by summing the absolute values of the 3 high frequency sub-bands at each pixel for each image, and finding which image has the largest sum at each pixel. A new set of 4 sub-bands is created for our output image, and for each pixel in these new sub-bands, we use the pixel from the corresponding 4 sub-bands of the input images that had the largest sum at that pixel. Thus every pixel coordinate in the new sub-bands comes from the best input image’s decomposition at that pixel, but different coordinates do not have to come from the same input image. The 4 sub-bands are then run through an inverse wavelet transform to produce a single new high-resolution image that is the sharpest everywhere.

We have only 4 sub-bands for each image because this is all done using a 1-level decomposition. More levels were found to produce blocky results (due to each decomposition halving the resolution). Interestingly, the complex wavelet approach did not have this problem (see below).

We used the PetteriAimonen focus-stack solution as a baseline for comparison (it uses its own alignment). It is available here: https://github.com/PetteriAimonen/focus-stack

[WAVELET IMAGE]

[PetteriAimonen Results]

[RESULTS]

Laplacian Pyramid

The Laplacian pyramid algorithm makes use of differences of Gaussian blurred images to find edges, and evaluates them at difference down-scaled resolutions for a multi-resolution frequency evaluation. We ported algorithm code into our repository from the repository at https://github.com/sjawhar/focus-stacking, which is an implementation based on the paper by Wang et al. We adjusted the algorithm to use our aligned images. Description…

Entropy and deviation from Wang et al…

[LAYERS IMAGE]

[RESULTS]

Complex wavelet

The complex wavelet decomposition produces both real and imaginary values. Thus they produce twice as many sub-bands as real wavelets and more information. This additional information contains finer directional frequency information — including separate bands for positive and negative change information [reference? https://www-sigproc.eng.cam.ac.uk/foswiki/pub/Main/NGK/SigProc_talk07.pdf] — and produces better results. We used a 7 level decomposition with the qshift wavelet, which is built into the Python dtcwt.Transform2d() library class. The complex wavelets apparently have enough information to prevent the blockiness seen with deeper real wavelet decompositions. We also used sub-band and spatial consistency checks as described in the complex wavelets paper by Forster et al.

For image sets of smaller images (<1 megapixel) we found that at least 5 decomposition levels of complex wavelets were needed to get good contrast in dark areas, with 7 levels being a sweet spot. For the 15 megapixel image sets, 7 levels proved to still not be enough to retain high contrast in the dark areas. We did not have time to run the algorithm with higher levels, but this is something to keep in mind when best results are desired.

More explanation…

[SUB-BANDS IMAGE]

[RESULTS]

Conclusions

We find the alignment provided by the OpenCV library is very effective with all algorithms. The parameters used can be seen in our GitHub repository. More work could be done for determining processing order for image sets that are not already sorted in an ascending or descending focus distance order.

The Laplacian provides a simple and fast edge detection algorithm with vibrant colors but less resolution. We think this is because difference information usually is positive on one side of an edge and negative on the other side, resulting in both pixels being recognized as an edge.

The real wavelet records finer details, but appears somewhat grainy. We think this is because the sum of all 3 high frequency sub-bands is compared for each pixel, so the direction of frequency change is not being considered when comparing neighboring pixels. This can result in inconsistencies when more than one sub-band has significant changes from pixel to pixel.

The Laplacian Pyramid produces high resolution and smooth color results, but is subject to slightly over-saturated colors and a “blooming” overexposure effect. This can obscure details. We think this is because comparing the differences of a discrete number of layers cannot retain the highest frequency information. Nevertheless, this approach produces results that are pleasing to the eye.

The complex wavelet produces very high quality results comparable to the Laplacian pyramid but without the overexposed lighting effect. We think this is a good solution for retaining details for scientific purposes. The choice of decomposition level still needs to be investigated, as any level 5 through 11 can produce slightly better results (smoother colors) depending on the (low-resolution) data-set used. As can be seen in our repository, this algorithm offers a superior solution while not being difficult to code from scratch.

Further possible work

More efficient code could be possible for the algorithms. We implemented the algorithms to use as much similar code as possible, to ensure fair comparisons. For example, the complex wavelet algorithm converts its decompositions into the same structure we used for real wavelets when comparing pixels. We added garbage collection after many intensive operations to save memory, but still had memory crashes on a 16gb machine with an Intel i7-4790k (specifically on the big version of the clock dataset after decomposing 79 of the 500 images for sub-band comparisons). This can be looked at more closely to make the algorithms more efficient, perhaps by performing the calculations piece-wise.

On high resolution images, using higher decomposition levels for the complex wavelet can produce results with better contrast and more definition. We believe with sufficient computing power and/or time, this will produce results that are superior to the Laplacian pyramid in every way, similar to what was seen on the low resolution results.

More research can be done with consistency checks and edge detection metrics. For example, deviation and entropy (used in the Laplacian pyramid) could possibly be applied to complex wavelet pixel decisions. The paper on real wavelets by Pajares et al describes several selection methods, such as the coefficient grouping method, adaptive weighted averages, and variance area based selection criteria. These could possibly be applied to any of the discussed algorithms.

Code & Data-sets

https://github.com/compimg23/cifstack

We invite readers to try our program with the data-sets and view our results for some of the higher-resolution data-sets at the following link…

[Link]

Acknowledgements

We would like to thank Professor Ivo Ihrke, Dr. John Meshreki, and Mr. Syed Kazim for their support and advice on this project.

Bibliography

- Laplacian paper

- Real wavelet paper

- Laplacian pyramid paper

- Complex wavelet paper

End.

Aktualisiert um 10:48 AM am 2024-03-21 von gl198